Recent Developments in CNNs

CNN stands for Convolutional Neural Network.

A convolutional neural network (CNN) is a class of feedforward neural networks that perform convolution operations and have a deep structure. CNNs are among the representative algorithms of deep learning. They are capable of representation learning, and their hierarchical structure lets them classify input in a shift-invariant way. For this reason they are also called Shift-Invariant Artificial Neural Networks (SIANN).
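The "shift-invariant" idea can be made concrete with a minimal pure-Python sketch. It assumes no framework, and the helper names `conv2d_valid` and `shift_right` are hypothetical. Convolution on its own is shift-*equivariant*: shifting the input shifts the feature map by the same amount. Taking a global max over the feature map then makes the result shift-*invariant*, as long as the pattern is not pushed off the edge of the image.

```python
def conv2d_valid(img, kernel):
    """2D cross-correlation without padding (what CNN layers call 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    out = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            # weighted sum of the kh x kw window whose top-left corner is (i, j)
            row.append(sum(img[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


def shift_right(img, k=1):
    """Shift every row k pixels to the right, filling the left side with zeros."""
    return [[0] * k + row[:-k] for row in img]


# A small pattern with empty margins, so a 1-pixel shift loses nothing.
img = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0, 0],
    [0, 0, 1, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
kernel = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # 3x3 box filter

fmap = conv2d_valid(img, kernel)
fmap_shifted = conv2d_valid(shift_right(img), kernel)

# Equivariance: convolving the shifted image == shifting the feature map.
print(fmap_shifted == shift_right(fmap))  # True
# Invariance after global max pooling: the strongest response is unchanged.
print(max(map(max, fmap)), max(map(max, fmap_shifted)))  # 5 5
```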

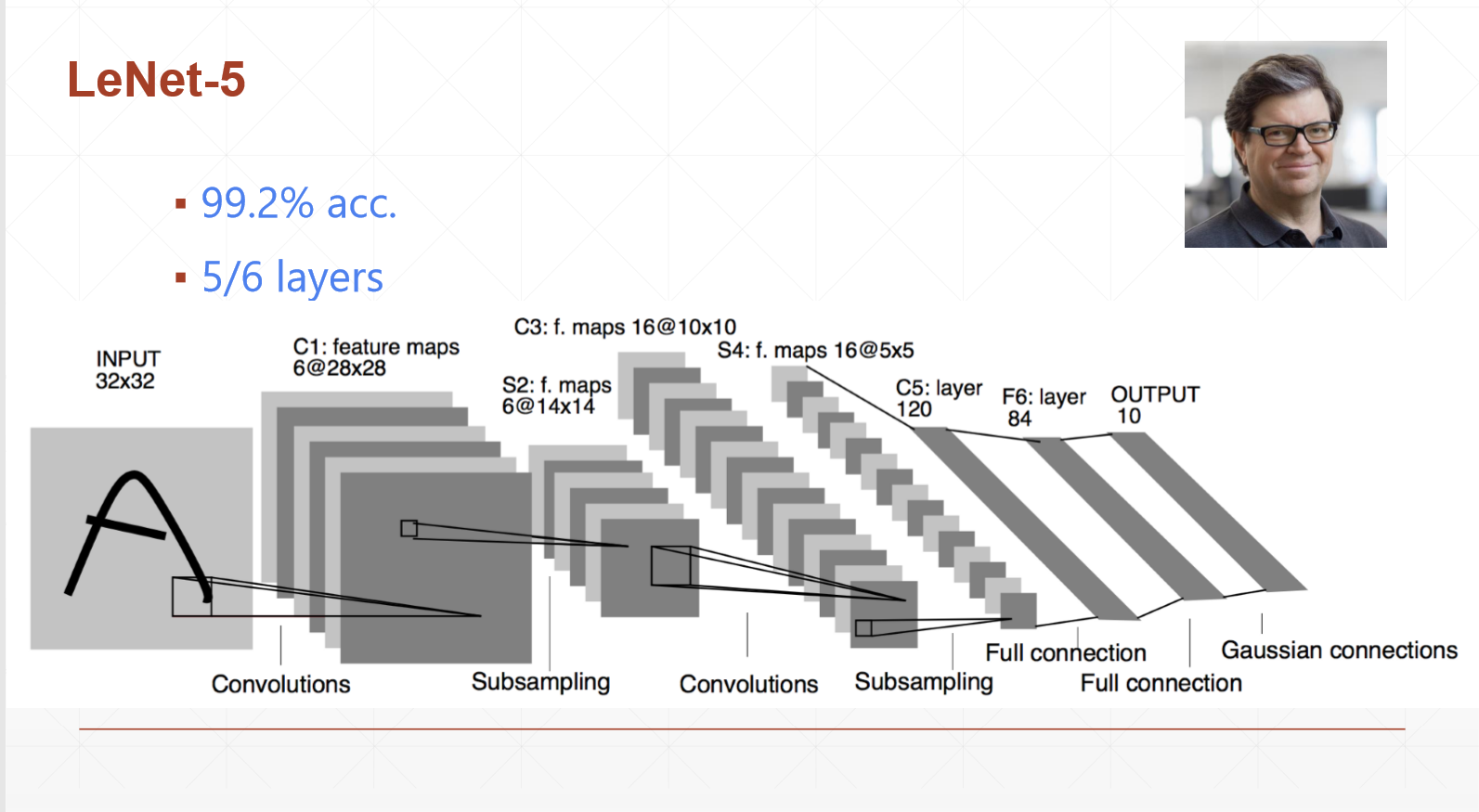

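Research on CNNs began in the 1980s and 1990s. Time-delay neural networks and LeNet-5 were among the earliest CNNs. In the 21st century, deep learning theory was introduced and numerical computing hardware improved, and CNNs developed rapidly as a result. They have since been applied to computer vision, natural language processing, and other fields.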

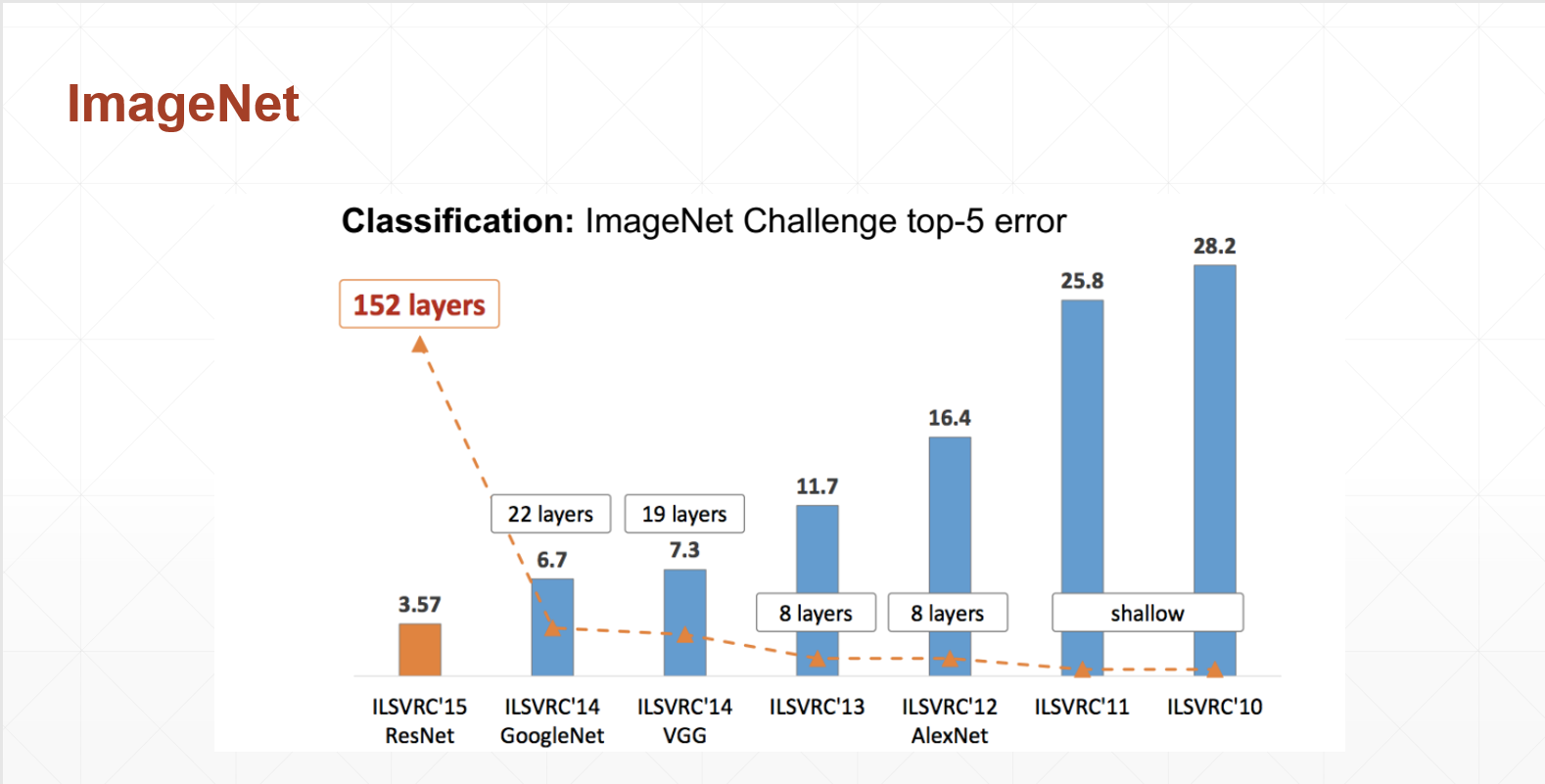

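Before 2012, error rates on ImageNet were fairly high. Then AlexNet appeared in 2012 and cut the error rate by nearly 10 percentage points in one stroke, setting off a wave of deep learning.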

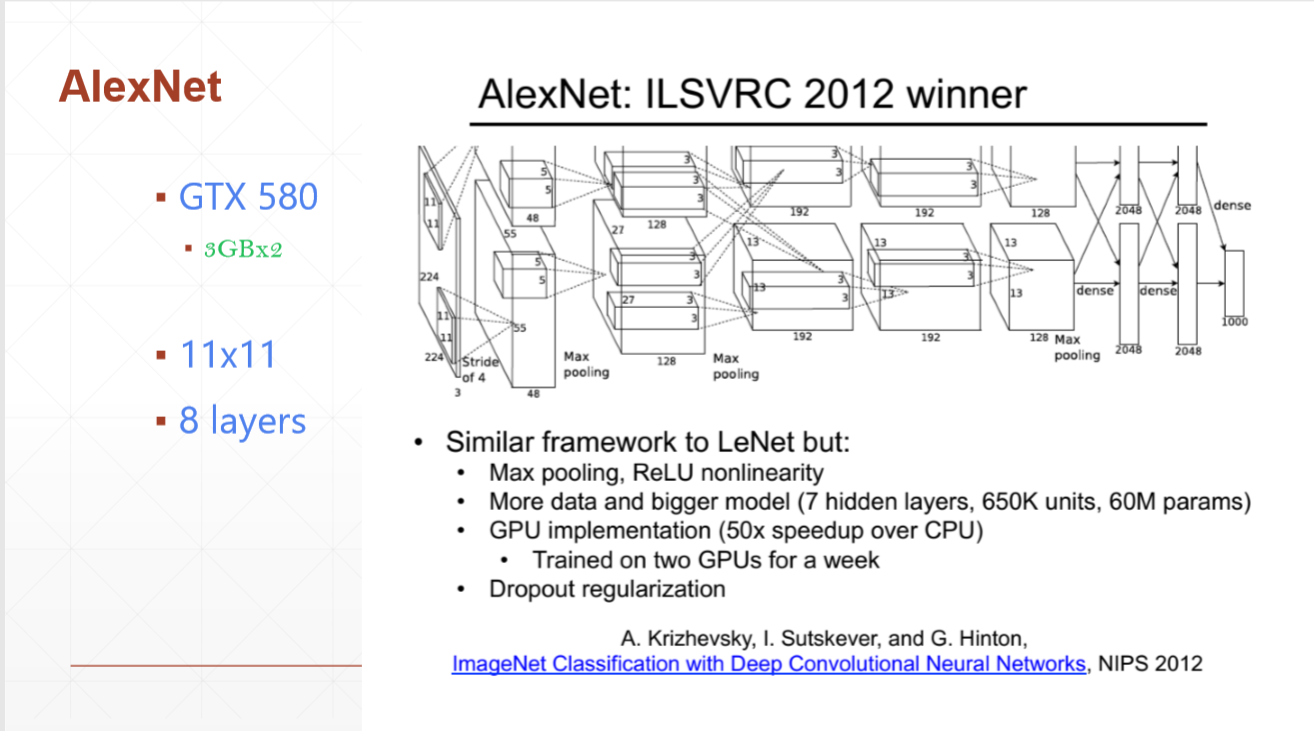

AlexNet

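The figure shows the AlexNet architecture. It has eight layers and extracts features in broad strokes with 11×11 kernels. It was an excellent model in its day, but by today's standards its granularity is too coarse. As the figure also shows, the most advanced GPUs of the time had only 3 GB of memory, so the network was split across two GPUs.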
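The standard output-size formula for a convolution shows how coarse an 11×11 kernel is: out = ⌊(in + 2·padding − kernel) / stride⌋ + 1. The sketch below applies it to an AlexNet-style first layer. It assumes the settings torchvision's AlexNet uses (224×224 input, kernel 11, stride 4, padding 2), so treat it as an illustration rather than the paper's exact configuration. The helper name `conv_out_size` is hypothetical.

```python
def conv_out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv (or pooling) layer, with floor rounding."""
    return (size + 2 * padding - kernel) // stride + 1


# AlexNet-style first layer (assumed: 224x224 input, 11x11 kernel, stride 4, padding 2)
first = conv_out_size(224, kernel=11, stride=4, padding=2)
print(first)  # 55

# For comparison: a 3x3, stride-1, padding-1 layer keeps the 224x224 size
print(conv_out_size(224, kernel=3, stride=1, padding=1))  # 224
```

In that first layer, each output position summarizes an 11×11 patch, and neighbouring positions are 4 pixels apart. This is the coarse granularity described above.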

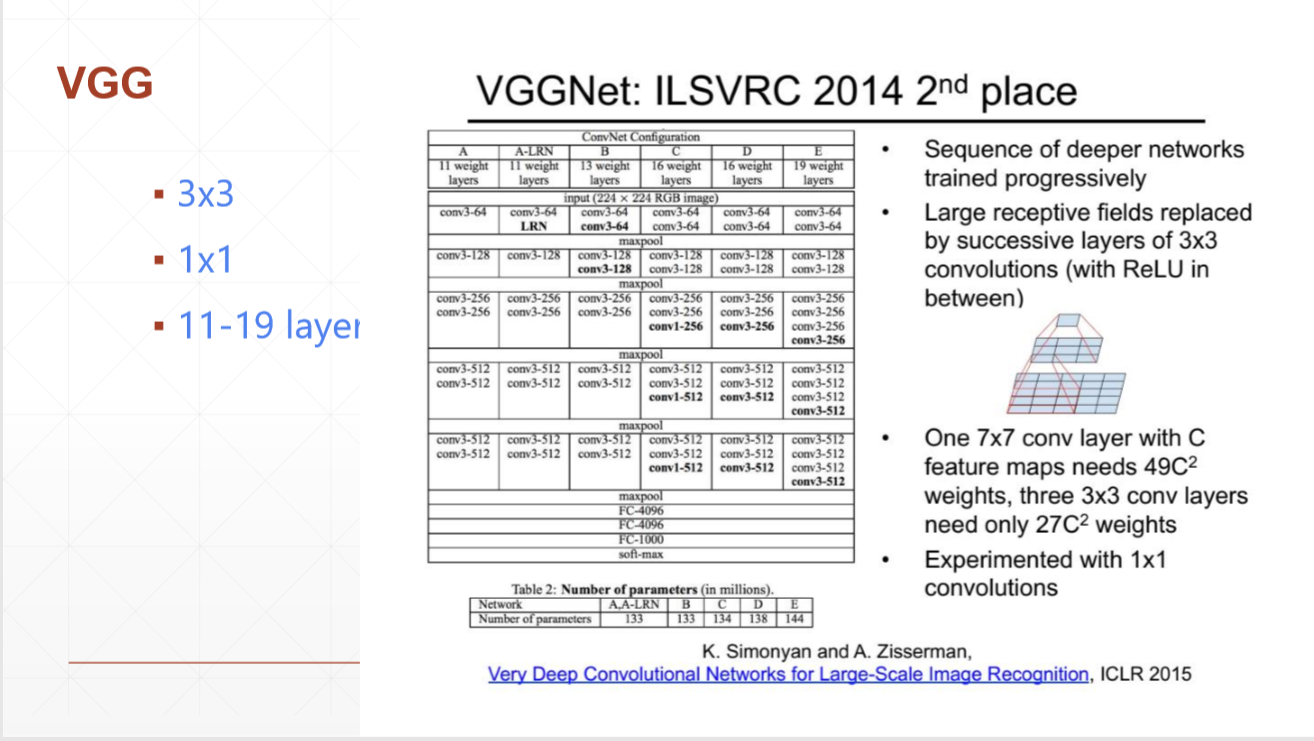

VGG

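This is VGG, the runner-up at ILSVRC 2014. Compared with AlexNet (2012), its error dropped by nearly another 10 percentage points. The network is deeper, growing from AlexNet's 8 layers to 11-19 layers. The kernels are also smaller and finer-grained (3×3).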
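VGG replaces large kernels with stacks of 3×3 kernels. Stacked stride-1 3×3 convolutions cover the same region as one larger kernel, but with fewer weights and more nonlinearities in between. The pure-Python sketch below illustrates this arithmetic. The channel count `C` is a hypothetical value, and biases are ignored.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stacked stride-1 convolutions."""
    # each extra stride-1 layer widens the field by (kernel - 1) pixels
    return 1 + num_layers * (kernel - 1)


def conv_weights(c_in, c_out, kernel):
    """Number of weights in one conv layer (biases ignored)."""
    return c_in * c_out * kernel * kernel


C = 256  # hypothetical channel count, same for input and output
print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7

three_3x3 = 3 * conv_weights(C, C, 3)  # 27 * C^2
one_7x7 = conv_weights(C, C, 7)        # 49 * C^2
print(three_3x3 / (C * C), one_7x7 / (C * C))  # 27.0 49.0
```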

GoogLeNet

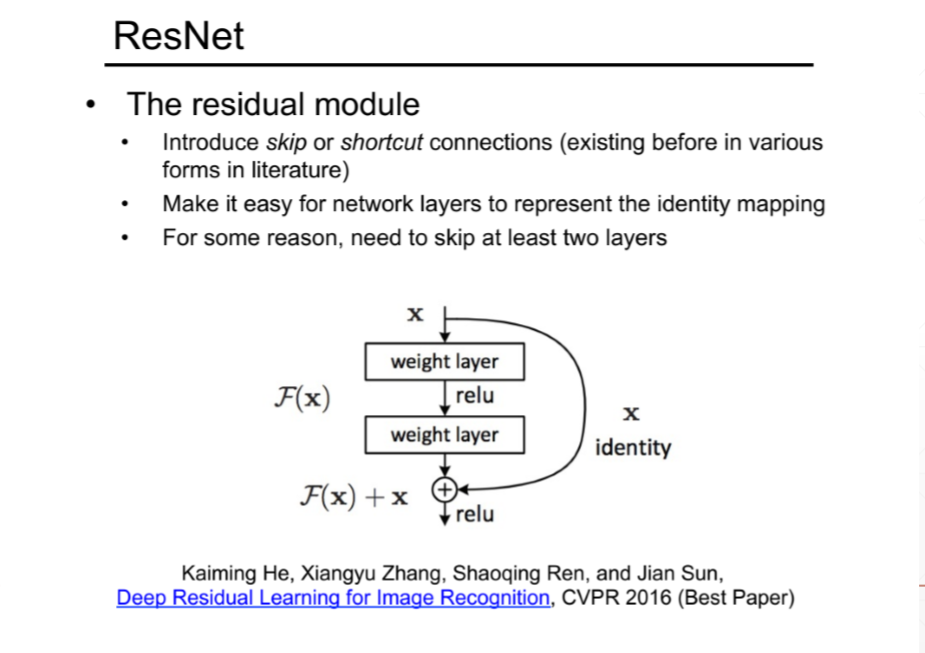

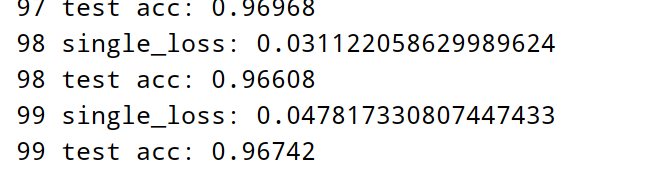

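The figure above shows GoogLeNet, the winner of ILSVRC 2014. Its name capitalizes the "L" as a tribute to LeNet5, proposed by Yann LeCun in 1998. The network has 22 layers. At the time, people wondered whether more layers always meant better training results. Extensive experiments showed that they do not: deeper is not automatically better. So in 2015 the Chinese researcher Kaiming He (a giant in the field, worth looking up if you are curious) proposed the residual network, ResNet.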

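The key innovation of this model is a concept similar to a "short circuit" in electronics. A shortcut connection lets a block's input bypass the block's layers and be added to their output. If some layers would hurt training, the block can learn to act as (nearly) an identity mapping, which effectively skips them. The network can therefore be very deep and still keep a low error.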
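A toy pure-Python sketch of the shortcut idea follows. Vectors are plain lists, the helper names are hypothetical, and BatchNorm is left out. With every weight set to zero, the residual block passes a non-negative input through unchanged, while a plain two-layer block without the shortcut outputs zeros. The shortcut makes "do nothing" the easy default, which is why extra blocks need not make the network worse.

```python
def relu(v):
    return [max(0.0, t) for t in v]


def matvec(w, v):
    """Matrix-vector product, with w given as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def plain_block(x, w1, w2):
    # two layers, no shortcut: relu(W2 . relu(W1 . x))
    return relu(matvec(w2, relu(matvec(w1, x))))


def residual_block(x, w1, w2):
    # the layers only have to learn the residual F(x) = W2 . relu(W1 . x)
    fx = matvec(w2, relu(matvec(w1, x)))
    # shortcut: add the input back before the final ReLU
    return relu([f + t for f, t in zip(fx, x)])


x = [1.0, 2.0, 0.5]
zeros = [[0.0] * 3 for _ in range(3)]
print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 0.5]
```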

In this post we focus on LeNet5 and ResNet.

LeNet5

LeNet5 was proposed in 1998 by Yann LeCun and his team. At the time, it was applied successfully to handwritten digit recognition.

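The network has 5 layers: 2 convolutional layers and 3 fully connected layers. The input images are 32×32; the code below uses 3-channel color images from CIFAR-10.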
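It helps to trace how a 32×32 input shrinks through the layers, because that determines the `16*5*5` input size of the first fully connected layer in the code. Below is a minimal sketch using the standard output-size formula with floor rounding (PyTorch's default for `Conv2d` and `MaxPool2d`). The helper name `out_size` is hypothetical.

```python
def out_size(size, kernel, stride=1, padding=0):
    return (size + 2 * padding - kernel) // stride + 1


size = 32
trace = []
for name, kernel, stride in [("conv 5x5", 5, 1), ("maxpool 2x2", 2, 2),
                             ("conv 5x5", 5, 1), ("maxpool 2x2", 2, 2)]:
    size = out_size(size, kernel, stride)
    trace.append((name, size))
    print(f"{name:12s} -> {size}x{size}")

flat_features = 16 * size * size  # 16 channels after the second conv
print(flat_features)  # 400
```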

lenet5.py

```python
import torch
from torch import nn


class Lenet5(nn.Module):
    """LeNet5-style network for 3x32x32 inputs (e.g. CIFAR-10)."""

    def __init__(self):
        super(Lenet5, self).__init__()
        # Convolutional part: [b, 3, 32, 32] -> [b, 16, 5, 5]
        self.conv_unit = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=6, kernel_size=5, stride=1, padding=0),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0),
            nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5, stride=1, padding=0),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0),
        )
        # Fully connected part: 16*5*5 features -> 10 class scores
        self.fc_unit = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, 10),
        )

    def forward(self, x):
        batchsz = x.size(0)
        x = self.conv_unit(x)            # [b, 16, 5, 5]
        x = x.view(batchsz, 16 * 5 * 5)  # flatten to [b, 400]
        logits = self.fc_unit(x)         # [b, 10]
        return logits


def main():
    net = Lenet5()
    tmp = torch.randn(2, 3, 32, 32)
    # shape check of the conv part (moved out of __init__)
    print('conv_out:', net.conv_unit(tmp).shape)  # torch.Size([2, 16, 5, 5])
    out = net(tmp)
    print('lenet out:', out.shape)  # torch.Size([2, 10])


if __name__ == '__main__':
    main()
```
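As a sanity check on model size, the parameters of this Lenet5 can be counted by hand. A conv layer has c_out·c_in·k·k weights plus c_out biases. A linear layer has n_in·n_out weights plus n_out biases. Pooling and ReLU have no parameters. Here is a small pure-Python sketch; the helper names are hypothetical.

```python
def conv_params(c_in, c_out, kernel):
    # weights c_out x c_in x k x k, plus one bias per output channel
    return c_out * c_in * kernel * kernel + c_out


def linear_params(n_in, n_out):
    # weight matrix n_out x n_in, plus one bias per output
    return n_in * n_out + n_out


layers = {
    "conv1 (3->6, 5x5)": conv_params(3, 6, 5),
    "conv2 (6->16, 5x5)": conv_params(6, 16, 5),
    "fc1 (400->120)": linear_params(16 * 5 * 5, 120),
    "fc2 (120->84)": linear_params(120, 84),
    "fc3 (84->10)": linear_params(84, 10),
}
for name, n in layers.items():
    print(f"{name:20s} {n:6d}")

total = sum(layers.values())
print("total", total)  # total 62006
```

With PyTorch installed, `sum(p.numel() for p in Lenet5().parameters())` should give the same total.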

main.py

```python
import torch
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

from lenet5 import Lenet5  # module name must match the file name lenet5.py


def main():
    batchsz = 128

    cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
    ]), download=True)
    cifar_train = DataLoader(cifar_train, batch_size=batchsz, shuffle=True)

    # train=False selects the test split (True would reuse the training images)
    cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
    ]), download=True)
    cifar_test = DataLoader(cifar_test, batch_size=batchsz, shuffle=False)

    x, label = next(iter(cifar_train))  # DataLoader iterators have no .next()
    print('x:', x.shape, 'label:', label.shape)

    # fall back to the CPU when no GPU is available
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = Lenet5().to(device)
    criteon = nn.CrossEntropyLoss().to(device)
    optimizer = optim.Adam(model.parameters(), lr=1e-3)
    print(model)

    for epoch in range(100):
        model.train()
        for batchidx, (x, label) in enumerate(cifar_train):
            x, label = x.to(device), label.to(device)
            logits = model(x)              # [b, 10]
            loss = criteon(logits, label)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # loss of the last batch in this epoch
        print(epoch, "single_loss:", loss.item())

        model.eval()
        with torch.no_grad():
            total_correct = 0
            total_num = 0
            for x, label in cifar_test:
                x, label = x.to(device), label.to(device)
                logits = model(x)
                pred = logits.argmax(dim=1)
                total_correct += torch.eq(pred, label).float().sum().item()
                total_num += x.size(0)
            acc = total_correct / total_num
            print(epoch, 'test acc:', acc)


if __name__ == '__main__':
    main()
```
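The evaluation loop adds up correct predictions and sample counts over all batches and divides once at the end. This matters because the last batch is usually smaller: CIFAR-10's 10,000 test images in batches of 128, for example, leave a final batch of 16. Averaging per-batch accuracies would over-weight that batch. Below is a framework-free sketch of the same logic, with lists standing in for tensors; the helper names are hypothetical.

```python
def argmax(row):
    """Index of the largest score (plays the role of logits.argmax(dim=1) for one row)."""
    return max(range(len(row)), key=lambda i: row[i])


def accuracy(batches):
    """batches: iterable of (logits, labels); logits are lists of class scores."""
    total_correct = 0
    total_num = 0
    for logits, labels in batches:
        preds = [argmax(row) for row in logits]
        total_correct += sum(p == y for p, y in zip(preds, labels))
        total_num += len(labels)
    return total_correct / total_num


batches = [
    ([[0.1, 0.9], [0.8, 0.2]], [1, 1]),  # 1 of 2 correct
    ([[0.3, 0.7]], [1]),                 # 1 of 1 correct
]
# 2 correct out of 3; averaging per-batch accuracies would give 0.75 instead
print(accuracy(batches))  # 0.6666666666666666
```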

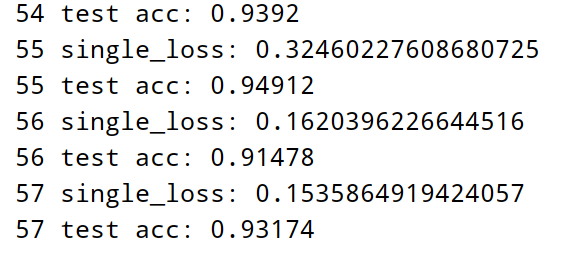

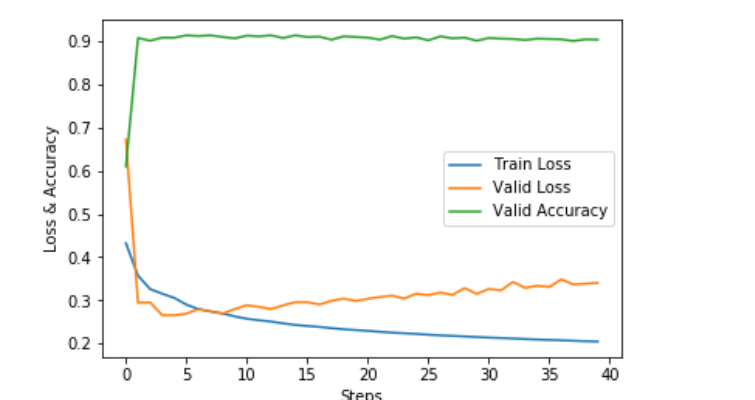

The plots show clear oscillation once training passes about 50 epochs.

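I trained for only 100 epochs, and the accuracy reached about 97%. Note, however, that a loader built with `datasets.CIFAR10('cifar', True, ...)` reads the training split. The LeNet5 main.py as originally written built its test loader that way, so this figure most likely reflects accuracy on training images rather than on held-out test data.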

ResNet

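ResNet uses this kind of "shortcut" operation to preserve both depth and accuracy. Kaiming He proposed ResNet at ILSVRC 2015, where it also took first place. The ResNet paper won the CVPR 2016 Best Paper Award.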

resnet.py

```python
import torch
from torch import nn
from torch.nn import functional as F


class ResBlk(nn.Module):
    """ResNet basic block: two 3x3 convs plus a shortcut connection."""

    def __init__(self, ch_in, ch_out, stride=1):
        """
        :param ch_in: number of input channels
        :param ch_out: number of output channels
        :param stride: stride of the first conv (2 halves height and width)
        """
        super(ResBlk, self).__init__()
        self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(ch_out)
        self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(ch_out)

        # Shortcut: identity by default. A 1x1 conv is needed whenever the
        # channel count OR the spatial size changes, so both branches match.
        self.extra = nn.Sequential()
        if ch_out != ch_in or stride != 1:
            self.extra = nn.Sequential(
                nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                nn.BatchNorm2d(ch_out),
            )

    def forward(self, x):
        """
        :param x: [b, ch_in, h, w]
        :return: [b, ch_out, h', w']
        """
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        out = self.extra(x) + out  # the "shortcut": add the input back
        out = F.relu(out)
        return out


class ResNet18(nn.Module):
    # Simplified ResNet with 4 residual blocks for 32x32 inputs; despite the
    # name, it is shallower than the 18-layer network from the paper.
    def __init__(self):
        super(ResNet18, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=3, padding=0),
            nn.BatchNorm2d(64),
        )
        # [b, 64, 10, 10] -> [b, 128, 5, 5] -> [b, 256, 3, 3] -> [b, 512, 2, 2] -> [b, 512, 1, 1]
        self.blk1 = ResBlk(64, 128, stride=2)
        self.blk2 = ResBlk(128, 256, stride=2)
        self.blk3 = ResBlk(256, 512, stride=2)
        self.blk4 = ResBlk(512, 512, stride=2)
        self.outlayer = nn.Linear(512 * 1 * 1, 10)

    def forward(self, x):
        """
        :param x: [b, 3, 32, 32]
        :return: [b, 10] class scores
        """
        x = F.relu(self.conv1(x))
        x = self.blk1(x)
        x = self.blk2(x)
        x = self.blk3(x)
        x = self.blk4(x)
        x = F.adaptive_avg_pool2d(x, [1, 1])  # [b, 512, 1, 1]
        x = x.view(x.size(0), -1)              # [b, 512]
        x = self.outlayer(x)                   # [b, 10]
        return x


def main():
    blk = ResBlk(64, 128, stride=4)
    tmp = torch.randn(2, 64, 32, 32)
    out = blk(tmp)
    print('block:', out.shape)  # torch.Size([2, 128, 8, 8])

    x = torch.randn(2, 3, 32, 32)
    model = ResNet18()
    out = model(x)
    print('resnet:', out.shape)  # torch.Size([2, 10])


if __name__ == '__main__':
    main()
```
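To see why the shortcut needs a 1×1 projection whenever `stride != 1`, and not only when the channel counts differ, the sketch below traces spatial sizes through ResNet18 as defined here. It compares two cases: projecting only when channels change, and projecting also when `stride != 1`. With the channel-only rule, blk4 (512→512, stride 2) gets an identity shortcut, which leaves a 2×2 shortcut next to a 1×1 main path. PyTorch broadcasting then silently adds a `[b, 512, 1, 1]` tensor to a `[b, 512, 2, 2]` one instead of raising an error, so this mismatch is easy to miss. The code is pure Python, and the helper names are hypothetical.

```python
BLOCKS = [(64, 128, 2), (128, 256, 2), (256, 512, 2), (512, 512, 2)]  # blk1..blk4


def out_size(size, kernel, stride=1, padding=0):
    return (size + 2 * padding - kernel) // stride + 1


def block_sizes(size, ch_in, ch_out, stride, project_on_stride):
    # main path: 3x3 conv (given stride, padding 1), then 3x3 conv (stride 1, padding 1)
    main = out_size(out_size(size, 3, stride, 1), 3, 1, 1)
    needs_projection = ch_in != ch_out or (project_on_stride and stride != 1)
    # the 1x1 projection uses the block's stride; the identity keeps the input size
    shortcut = out_size(size, 1, stride) if needs_projection else size
    return main, shortcut


def trace(project_on_stride):
    size = out_size(32, 3, 3, 0)  # conv1: 3x3, stride 3, no padding: 32 -> 10
    rows = []
    for ch_in, ch_out, stride in BLOCKS:
        main, shortcut = block_sizes(size, ch_in, ch_out, stride, project_on_stride)
        rows.append((ch_in, ch_out, main, shortcut))
        size = main
    return rows


for flag in (False, True):
    print("projection also when stride != 1:", flag)
    for ch_in, ch_out, main, shortcut in trace(flag):
        note = "" if main == shortcut else "  <- mismatch"
        print(f"  {ch_in}->{ch_out}: main {main}x{main}, shortcut {shortcut}x{shortcut}{note}")
```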

```python
import torch
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

from lenet5 import Lenet5    # not used below; kept so the models can be swapped
from resnet import ResNet18  # the ResNet code above, saved as resnet.py


def main():
    batchsz = 128
    # ImageNet mean/std, applied to both splits
    normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                     std=[0.229, 0.224, 0.225])

    cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        normalize,
    ]), download=True)
    cifar_train = DataLoader(cifar_train, batch_size=batchsz, shuffle=True)

    cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        normalize,
    ]), download=True)
    cifar_test = DataLoader(cifar_test, batch_size=batchsz, shuffle=False)

    x, label = next(iter(cifar_train))  # DataLoader iterators have no .next()
    print('x:', x.shape, 'label:', label.shape)

    # fall back to the CPU when no GPU is available
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = ResNet18().to(device)
    criteon = nn.CrossEntropyLoss().to(device)
    optimizer = optim.Adam(model.parameters(), lr=1e-3)
    print(model)

    for epoch in range(1000):
        model.train()
        for batchidx, (x, label) in enumerate(cifar_train):
            x, label = x.to(device), label.to(device)
            logits = model(x)              # [b, 10]
            loss = criteon(logits, label)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # loss of the last batch in this epoch
        print(epoch, 'loss:', loss.item())

        model.eval()
        with torch.no_grad():
            total_correct = 0
            total_num = 0
            for x, label in cifar_test:
                x, label = x.to(device), label.to(device)
                logits = model(x)
                pred = logits.argmax(dim=1)
                total_correct += torch.eq(pred, label).float().sum().item()
                total_num += x.size(0)
            acc = total_correct / total_num
            print(epoch, 'test acc:', acc)


if __name__ == '__main__':
    main()
```

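As you can see, the ResNet main file differs very little from the LeNet5 one, so a single template can be used to train different models. In practice you can also add engineering tricks such as data augmentation. Rotation angles should not be too large; -15° to 15° works well, and experiments showed that larger angles give poor results.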
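In torchvision, this kind of augmentation is usually added to the training transforms only, with `transforms.RandomRotation(15)`. That transform draws an angle uniformly from [-15°, 15°]. To show what it does without any dependencies, here is a small pure-Python sketch that rotates a 2D grid by a random angle in that range. It uses nearest-neighbour sampling and fills with zeros, and the function names are hypothetical. It is an illustration, not torchvision's implementation.

```python
import math
import random


def rotate_nearest(img, degrees):
    """Rotate a 2D grid about its centre; pixels mapped from outside the grid become 0."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    rad = math.radians(degrees)
    cos_a, sin_a = math.cos(rad), math.sin(rad)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # inverse mapping: find the source pixel that lands on (y, x)
            dx, dy = x - cx, y - cy
            sx = cos_a * dx + sin_a * dy + cx
            sy = -sin_a * dx + cos_a * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= iy < h and 0 <= ix < w:
                out[y][x] = img[iy][ix]
    return out


def random_rotate(img, max_degrees=15, rng=random):
    """Training-time augmentation: rotate by an angle drawn from [-max_degrees, max_degrees]."""
    angle = rng.uniform(-max_degrees, max_degrees)
    return rotate_nearest(img, angle), angle


rng = random.Random(0)
img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
rotated, angle = random_rotate(img, 15, rng)
print(round(angle, 2), rotated)
```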